Few of the first physicians to settle among 17th-century colonists arrived in North America with a medical degree or any previous study at a European university. Typically, they had learned on the job, apprenticed to practicing physicians for a fee. (The profit motive fed the apprenticeship or preceptorial system just as it would drive the proprietary schools yet to come.) Some early practitioners did not possess even that much formal training, but instead developed “healing skills” amidst the harsh realities of a frontier existence. These forefathers became the first generation of medical teachers on this side of the Atlantic. Through the apprenticeship system, they imparted their limited understanding of health and disease, and their limited skills for treating the latter. After three or four years of shadowing a preceptor in his practice, and perhaps reading scarce medical texts, a pupil could nail his shingle to a door. In all likelihood, he would be only as good as the physician who trained him.

By the mid-1700s medical students and physicians with means could supplement their medical education abroad in Europe, particularly Edinburgh or London, attending universities, independent anatomy schools and/or private lecturers. Oxford University taught medicine by the 13th century; Cambridge University started its course in 1540. But even by the 18th century, this instruction was theoretical without practice. Alternatively, London hospitals offered medical instruction that was practical, without theory. The roots of scientific medical education go back to 16th-century University of Padua, eclipsed by the University of Leyden in the 17th century. Medical students from the United Kingdom studied on the continent until the University of Edinburgh opened a medical school in 1726 with faculty who had trained at Leyden.6 The University of Glasgow started its medical school in 1751. Scottish medical schools understood the need for linking didactic and clinical instruction. Each affiliated with a Royal Infirmary. The Scottish system would become the model for the University of Pennsylvania and other American medical schools.

Earning a medical degree required taking the same course of lectures for two years, which would be the norm until the 1870s. The repetition started out for practical reasons. “This was due to the fact that a limited number of books and equipment and the scarcity of trained professors of necessity made it impossible for each pupil to cover the field adequately in a shorter term.”9 Two years of hearing the same lectures theoretically gave students a second chance to absorb the quantity of information delivered through purely didactic instruction. One wonders if it would not have been better to divide the material over the two years, perhaps Materia Medica 1 and Materia Medica 2. That the course structure prevailed well beyond its practicality speaks to the resistance to change that characterized so much of American medical education prior to the 1870s.

Schools with access to hospitals typically required one year’s attendance in clinical lectures, on the wards and observing “public” operations. For Penn medical students, this happened at Pennsylvania Hospital (43, 44), founded in 1751 as the nation’s first such institution, and the Philadelphia Alms House (3). Harvard medical students attended Massachusetts General Hospital and the Boston City Hospital (7). Some schools also operated their own dispensaries to foster clinical instruction. These were essentially opportunities for eyes-on rather than hands-on clinical training.

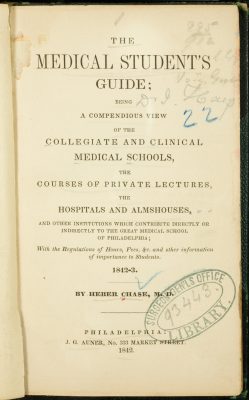

In some locations medical students had opportunities for extramural medical education in anatomy schools (48), private lectures and practical instruction (49-53) and dispensaries. By 1840 Philadelphia supported three medical schools: University of Pennsylvania (9-13, 66-96), Jefferson Medical College (29-32), and the Medical Department of Pennsylvania College. The city already boasted about 25 percent of all the nation’s medical students before the Pennsylvania College opened in 1839!10 To help these students navigate a cornucopia of options for extramural education, Heber Chase, MD (27) compiled a comprehensive student guidebook in 1842 (figure 6).11

Within the nation’s medical schools many apprentices and practicing physicians attended lectures alongside medical students without matriculating. The system of lecture tickets made this possible and desirable. With roughly 10 to 15 dollars in hand, anybody could purchase admission to a course of lectures directly from the professor, who profited directly from the students’ fees. At the University of Virginia and the University of Michigan the medical faculty had received salaries since the founding of their respective medical schools in 1825 and 1849. To some degree at Harvard, where an endowment for medical professors preceded the medical school itself, professors received salary.12 But the vast majority of faculty in American medical schools depended upon ticket sales for remuneration. The fees also defrayed expenses for equipment purchases and in some instances, rent. It therefore behooved professors to fill their lecture halls with any and all paying customers.

While the system of lecture tickets could be seen as democratic access to medical education during an era that espoused opportunities for the common man, it ultimately underserved the profession and the public. It became too easy to produce under-qualified physicians and too hard to consider changes to the curriculum, or entrance or graduation requirements that might threaten a professor’s popularity, and hence, his livelihood. In addition, professors received examination and graduation fees only if the pupil succeeded – a disincentive for withholding a diploma. Presumably reputable schools and professors upheld reasonable standards. But as a whole, medical education in 19th-century America stagnated while the number of medical schools increased dramatically until the Civil War intervened. Most of these burgeoning schools were proprietary. “Indeed, this form of medical school organization was the most distinctive feature of the American system and became a potent factor in determining the type of physicians that filled the ranks of the profession.”13 Some proprietary schools adhered to alternative forms of practice like homeopathy (26) and eclectic medicine (4, 18).

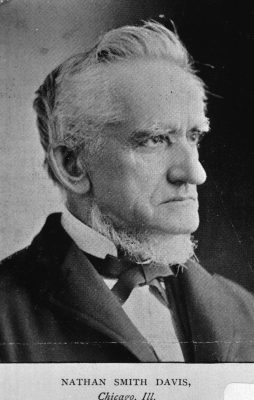

Lecture tickets represented just part of the problem with 19th-century American medical education. Regulatory agencies to oversee medical schools’ adherence to standards did not exist, and many medical schools demonstrated laissez-faire enforcement of their own requirements. They did not strictly adhere to three years of apprenticeship. Nor did they necessarily enforce attendance at lectures, and since standards for examinations – if required at all – were so lax, students could complete their degree requirements having learned very little. Medical reformer Nathan S. Davis, MD (figure 7) warned of “those who have been cradled in the lap of luxury, who faint at obstacles, . . . who spend half their lecture terms in eating houses and places of amusement; or who leave the college when only three of the four short months have passed away.”14

Reformers addressed these issues and advocated for longer terms of courses, inclusion of laboratory science in the curriculum and better clinical training. As things stood, newly graduated physicians delivered babies without ever having performed a physical examination on a woman. Civil War surgeons fresh out of medical school amputated limbs and performed other surgical procedures for the first time. Stalwarts, naysayers and self-preservationists eager to maintain the status quo rebuked voices for reform. Unless change were to be universal, a school and its faculty’s competitive advantage would be at risk.

Prior to the 1870s attempts at reform yielded little success. In 1813 as University of Pennsylvania faculty debated adding more courses to the curriculum, Benjamin Rush, MD proposed extending the medical course from two to three years, not to add more time for lectures, but rather for practical training with their preceptors. His proposal was ahead of its time.15 In 1827 delegates from New England and New York medical schools convened in Northampton, MA, to discuss reforms such as requiring three years of medical study after a bachelor’s degree. The schools never followed through. The Georgia Medical School instituted a six-month lecture term in 1830 and abandoned it five years later. In 1838 Ohio physicians assembled in Columbus where they recommended extending lecture courses by one month, instituting a graded curriculum with more provisions for preclinical (basic) sciences, and requiring standards for preliminary education. The following year the Medical Society of New York State proposed a convention in Philadelphia with delegates from each state medical society and each medical school. Only a few showed up.16

The New York society attained better results in 1846 when it organized the National Medical Convention, an effort spearheaded by Davis. At least 100 delegates from 16 states journeyed to New York City to discuss reforms. This group met again in 1847 in Philadelphia as the American Medical Association (AMA) and elected Penn’s Nathaniel Chapman, MD (72) its first president. Roughly 250 delegates from 22 states and the District of Columbia hammered out a list of resolutions calling for higher standards of medical education, including prerequisite college studies. To this list they added bedside instruction at the next convention. Davis articulated the AMA’s goal “to adopt such a system of college organization and rate of lecture fees, as will induce a far larger proportion of those who practice medicine, to first qualify themselves thoroughly for the responsible duties they assume to perform.”17 This statement recalls the inherent problems with the system of lecture tickets.

In 1857 the New Orleans School of Medicine introduced a system of bedside instruction whereby students actively participated in the doctor-patient relationship. They followed a patient from admission through diagnosis and treatment to discharge or, in the event of death, through autopsy. “The New Orleans School thus pioneered the clinical clerkship in America, the modern method of clinical instruction that later came to be adopted by all American medical schools.”18 The University of Pennsylvania achieved another milestone in medical education in 1874 when it opened the doors to the University Hospital (94) on its new West Philadelphia campus. As conceived by William Pepper, Jr., MD, it was the first such academic-based hospital in the country, giving the medical school complete control over the clinical education of its students.

American medical education satisfied the aspirations of many who earned the degree and the right to practice, but not all. Physicians with the passion and financial resources went abroad to supplement what they had learned in medical school lecture courses and preceptorships. During the first half of the 19th century, they headed to France, and more specifically to Paris. French medical institutions were breaking new ground in medicine and medical education with their strong emphasis on clinical observation – compared to learning by lecture (repeatedly) in the American system.

In the 1840s Germany emerged as the center for physicians seeking to advance their training.19 They flocked there from the United States and countries throughout the world. The German medical community was also a scientific community, delving into experimental research. They did not just observe disease processes; they undertook critical thinking and experimentation in clinical and laboratory settings to understand them. The Germans also pioneered the development of clinical specialties, especially in the 1870s and ’80s, a time when a new generation of post-Civil War American medical school graduates hungered for a level of training not yet available at home. For decades to come, even into the 1920s, thousands of young American physicians studied abroad, returning with ideas about medical research and/or specialization in areas like laryngology and ophthalmology. They would help American medical schools and medical education attain the much-anticipated higher level of excellence in the early 20th century.

This international influence is not to suggest that American medical schools did not play an important role in shaping their own destiny. Though attempts at reform had failed in the earlier part of the 19th century, several of the most prominent medical schools succeeded in the 1870s. In 1871 Harvard extended its period of study to three nine-month terms; required written examinations for each course; instituted a graded curriculum of basic sciences prior to clinical courses; and made laboratory science an important part of the curriculum. The 1877 reforms at Penn included: a three-year course of five-month terms (extended to six months in 1882); a graded curriculum; emphasis on the laboratory sciences; and examinations at the end of each course. In 1877 the University of Michigan extended its term to nine months; in 1880 it required a three-year graded course with emphasis on laboratory sciences. Of these three progressive medical schools, Michigan was the first to require a fourth year, in 1890, an opportunity to expand clinical training. Other medical schools determined to remain competitive also adopted reforms.

A “casualty” of the reforms was the medical lecture ticket, which had prevailed at American medical schools for more than a century. As small schools consolidated into larger ones, and as universities centralized administration of their schools and made the medical faculty salaried professors, the system of lecture tickets became obsolete. Students paid a tuition bill as they do today. Matriculation tickets continued as proof of registration and payment of tuition, and schools may have issued cards confirming successful completion of a given course. However, the days of going to a professor’s home or office, or even a school office, to purchase admission to individual courses of lectures ended. These tickets were few and far between by 1893 when The Johns Hopkins University School of Medicine opened with the principles of a modern medical school in place from its very first day.

In 1910 Abraham Flexner published Medical Education in the United States and Canada, commonly known as the Flexner Report.20 He visited 156 medical schools and 12 graduate medical schools, evaluating entrance requirements, faculty size and qualifications, financial support, laboratory facilities and instructors, and hospital affiliations. Flexner recommended that schools consolidate or close, and some did. One analysis concluded that twelve (seven percent) of the 168 schools investigated by Flexner closed or merged as a result of his report.21 Yet, the reforms Flexner advocated had been in the pipeline of numerous medical schools throughout the late 19th century. Tougher standards for entrance and graduation, integration of basic science and clinical training into an expanded curriculum, university support of faculty, facilities and scientific research: change did not happen overnight, but it happened. American medical education stood in a much stronger place as the 20th century dawned.

The outbreak of World War I cut off Americans’ access to German institutions for advanced medical education, although the numbers making that journey already had declined considerably as opportunities had improved at home.22 As the world recovered from war and Spanish influenza, American medical schools and research institutions like the Rockefeller Institute rose to the forefront of medical education and research. Instead of American physicians going abroad for innovative training, in time, those from abroad would come here. Moreover, early-20th-century American medical schools set the stage for a group of esteemed clinicians, scientists, and teachers, whose legacy of the healing arts continues to pass from generation to generation of emerging physicians.

7. Leslie B. Arey, Northwestern University Medical School 1859-1979: A Pioneer in Educational Reform (Chicago: Northwestern University Medical School, 1979), 6.

8. E.F. Horine, Daniel Drake (1785-1852): Pioneer Physician of the Midwest (Philadelphia: University of Pennsylvania Press, 1961), 79.

9. William Frederick Norwood, Medical Education in the United States before the Civil War (Phila: University of Pennsylvania Press, 1944), 405.

10. George W. Corner, Two Centuries of Medicine: A History of the School of Medicine, University of Pennsylvania (Phila: J.B. Lippincott, 1965), 96.

11. Heber Chase, The Medical Student’s Guide: Being a Compendious View of the Collegiate and Clinical Medical Schools, the Courses of Private Lectures, the Hospitals and Almshouses, and Other Institutions which Contribute Directly or Indirectly to the Great Medical School of Philadelphia; With the Regulations of Hours, Fees, &c. and Other Information of Importance to Students (Phila: J.G. Auner, 1842).

12. Norwood, 389.

13. Ibid., 385.

14. N.S. Davis, History of Medical Education and Institutions in the United States, from the First Settlement of the British Colonies to the Year 1850 (Chicago: S.C. Griggs & Co., 1851), 174.

15. Corner, 63.

16. Norwood, 422-25.

17. Davis, 169.

18. Kenneth M. Ludmerer, Learning To Heal: The Development of American Medical Education (NY: Basic Books, Inc., 1985), 21.

19. Ibid., 31-33.

20. Abraham Flexner, Medical Education in the United States and Canada: A Report to the Carnegie Foundation for the Advancement of Teaching (NY: Carnegie Foundation, 1910).

21. Mark D. Hiatt, MD, MS, MBA, and Christopher G. Stockton, MSM, The Impact of the Flexner Report on the Fate of Medical Schools in North America after 1909, http://www.jpands.org/vol8no2/hiattext.pdf.

22. Ludmerer, 32.